AI Journey 1977-2025: Part 3 of 5: Professional Applications and Breakthroughs (2005-2020)

From Therapeutic Gaming to Real-Time Educational AI That Outperformed Google

Author: William Hawkes-Robinson | Series: The Path to DGPUNET and SIIMPAF | Published: October 2025 | Reading Time: ~16 minutes

Series Overview

This is Part 3 of a 5-part series documenting the technical evolution from my early introduction to role-playing games in 1977 and hobby programming in 1979 to modern distributed GPU computing and self-hosted Artificial Intelligence (AI) infrastructure. The series traces patterns that emerged over four decades and shows how they apply to current challenges in AI development and computational independence. This part focuses on applying technical skills to therapeutic and educational contexts, culminating in systems that outperformed commercial alternatives.

The Series:

- Part 1: Early Foundations (1977-1990) - RPGs, first programs, pattern matching, and establishing core principles

- Part 2: Building Infrastructure (1990-2005) - IRC bots, NLP, Beowulf clusters, distributed computing, and production systems

- Part 3: Professional Applications (2005-2020) - Therapeutic gaming, educational technology, and real-time AI that outperformed commercial solutions

- Part 4: The GPU Challenge and DGPUNET (2020-2025) - GPU scarcity, centralization concerns, and building distributed GPU infrastructure

- Part 5: SIIMPAF Architecture and Philosophy - Bringing four decades of lessons together in a comprehensive self-hosted AI system

Applying Technology to Human Needs

The period from 2004 to 2020 marked a significant shift: from building infrastructure and tools, at scales ranging from small to global enterprise, to applying those tools to specific human needs and trying to raise up the overall human condition through therapeutic interventions, education, and accessibility. This article doesn't cover my extensive neurotechnology work; to learn more about that, see Brain-Computer Interface RPG and NeuroRPG.

The technical skills from previous decades remained relevant, but the focus changed. It wasn't just about making systems work efficiently; it was about making them work effectively for the people who needed them, improving their functioning and quality of life. Performance still mattered, but accuracy, reliability, accessibility, security, and usability in real-world contexts mattered more.

RPG Research and Therapeutic Gaming

By the mid-2000s, I had been involved with role-playing games for nearly three decades - as a player, organizer, and increasingly as someone documenting and researching their effects. This led me to formally found RPG Research, a nonprofit focused on researching the effects of all role-playing game formats (tabletop (TRPG), live-action (LRPG), electronic (ERPG), and hybrid (HRPG)), especially their potential therapeutic and educational applications.

Being Called "The Grandfather of Therapeutic Gaming"

The title "The Grandfather of Therapeutic Gaming" initially came from peers and colleagues in the field, not from self-promotion. As Adam Johns of Game to Grow put it when referring a potential client to me whose needs were outside their scope of practice, I had "been tracking and involved in the therapeutic and educational application of role-playing games longer than anyone else." The title stuck, and I have since been introduced with it at conferences, in interviews, and in articles (see testimonials).

This wasn't about inventing therapeutic gaming - people had been using games therapeutically for decades before I started documenting it (though the subset of role-playing games is another story). Critically, it was about systematic tracking, research, and documentation over an extended period. I'd been organizing RPG sessions in libraries, schools, and community centers since the late 1970s, researching "optimizing the RPG experience" since 1979, using RPGs in educational settings since 1985, with incarcerated populations since 1989, and with many other populations for targeted therapeutic goals since 2004. I was working with diverse populations long before "therapeutic gaming" was a recognized field.

Technical Challenges in Therapeutic Contexts

Publishing as "W. A. Hawkes-Robinson" in journals and books, I have written hundreds of papers and articles on RPG-focused topics, and even some books of my own (and I have been interviewed or cited in others). In this article, however, we're focusing more on the technology side: how RPGs informed improvements in technology and AI development.

Working with therapeutic and educational applications of games presented specific technical needs:

1. Accessibility: Participants might have physical disabilities, cognitive differences, or sensory challenges. Systems needed to accommodate various needs without calling attention to individual differences.

2. Engagement: Therapeutic effectiveness often correlated with engagement. Technical systems needed to support immersion, not disrupt it.

3. Data collection: Understanding what worked required tracking outcomes, but tracking couldn't be intrusive or change the experience significantly.

4. Reliability: A system crash during a therapeutic session isn't just annoying - it can disrupt progress and trust.
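The data-collection and reliability requirements pull against each other: tracking has to happen, but it must never stall or crash a live session. Below is a minimal Python sketch of one way to reconcile them. It assumes a hypothetical `SessionEvent` schema and a caller-supplied storage function. Events go into a bounded queue that a background thread drains. When the queue is full or storage fails, the event is dropped and counted rather than interrupting play. This illustrates the general technique only; it is not the system used in these sessions.

```python
import queue
import threading
import time
from dataclasses import dataclass, field


@dataclass
class SessionEvent:
    """One observation from a session (hypothetical schema)."""
    session_id: str
    kind: str                                   # e.g. "turn_start", "break"
    detail: dict = field(default_factory=dict)
    timestamp: float = field(default_factory=time.time)


class NonBlockingRecorder:
    """Records session events without blocking or crashing the session.

    A background thread drains a bounded queue into `sink`. A full queue
    or a failing sink drops (and counts) the event instead of raising.
    """

    def __init__(self, sink, max_pending: int = 1000):
        self._sink = sink                       # callable taking a SessionEvent
        self._queue = queue.Queue(maxsize=max_pending)
        self._lock = threading.Lock()
        self.dropped = 0
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def _count_drop(self) -> None:
        with self._lock:
            self.dropped += 1

    def record(self, event: SessionEvent) -> None:
        try:
            self._queue.put_nowait(event)       # never waits on the session's thread
        except queue.Full:
            self._count_drop()                  # lose a data point, not session flow

    def _drain(self) -> None:
        while True:
            event = self._queue.get()
            if event is None:                   # shutdown sentinel from close()
                return
            try:
                self._sink(event)
            except Exception:
                self._count_drop()              # storage errors never reach the session

    def close(self) -> None:
        """Flush pending events, then stop the worker (call after the session)."""
        self._queue.put(None)
        self._worker.join()
```

The design choice is to sacrifice completeness of the data before the participant's experience. This matches the point above that tracking must not be intrusive.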

Early AI Assistant Development

In the late 1990s and early 2000s, I began serious work on what would eventually evolve into Hawke's AI Assistant. Early versions were written in Java and focused on:

- Automatic Speech Recognition (ASR): Converting voice to text

- Natural Language Processing (NLP): Understanding intent and context

- Interactive voice responses: Text-to-speech for system responses

- Primitive AI avatar: Visual representation and animation for more engaging interaction

These early attempts were limited by available technology. Speech recognition accuracy was poor compared to what we have now. Natural language understanding was basic. Processing power was limited. But the foundation was being established.
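One simple way to implement the intent-recognition step is pattern matching on the ASR transcript, routing it to a handler before a text-to-speech reply is generated. The sketch below is a hypothetical illustration in Python. The intent names and regular expressions are invented for the example and are not taken from Hawke's AI Assistant, which was written in Java.

```python
import re
from typing import Optional

# Hypothetical intents and patterns, for illustration only.
# Dict order encodes priority: the first matching intent wins.
INTENT_PATTERNS = {
    "roll_dice": [r"\broll\b.*\b(d\d+|dice|die)\b"],
    "rules_lookup": [r"\bhow (do|does)\b", r"\bwhat (is|are) the rules?\b"],
    "end_session": [r"\b(stop|quit|end) (the )?session\b"],
}


def match_intent(transcript: str) -> Optional[str]:
    """Return the first intent whose pattern matches the ASR transcript, else None."""
    text = transcript.lower().strip()
    for intent, patterns in INTENT_PATTERNS.items():
        if any(re.search(p, text) for p in patterns):
            return intent
    return None  # caller can fall back to "Sorry, could you rephrase that?"
```

Patterns like these are brittle against noisy speech recognition, which is part of why accuracy was such a limiting factor in that era.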

Integration with Second Life (circa 2009)

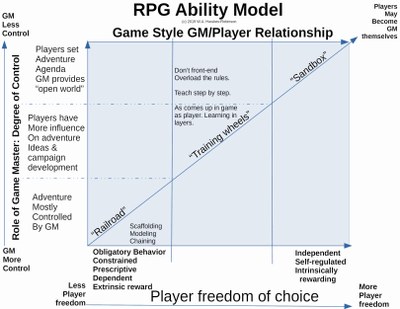

Back in the 1990s I had set up and run MUDs, UOX, and various IRC gaming "worlds." In 2003 I expanded this to Neverwinter Nights on Linux servers. I used the DM Client to take direct control of NPCs (in addition to scripting them) and the Aurora Toolset for world building, which brought the experience much closer to TRPG-style benefits in a more scalable ERPG format. Over the following years, I developed many virtual worlds with a wide range of technologies, including VR sites and game worlds in both text and full GUI environments. Some were roughly similar to Second Life, though more focused (even "railroaded") toward specific goals, and less sandboxed until participants were ready for more free-form sandbox opportunities (see the RPG Ability Model portion of the Hawkes-Robinson RPG Theory Models: Hawkes-Robinson RPG Models - RPG Ability Model). These worlds were optimized to help struggling high school students. Some of these platforms provided various degrees of:

- 3D virtual environment for social interaction

- Ability to practice life skills in safe contexts

- Visual avatar representation

- Real-time communication

- Individual and cooperative problem solving

- Frustration tolerance building, resilience building, and overall grit development

Relevant to this series, the technical challenge was making the AI assistants and NPCs in these virtual environments responsive enough to support learning objectives. This combined earlier NPC work with network programming, real-time processing, and educational goals.

Participants worked on:

- Social interaction skills

- Problem-solving in various scenarios

- Cooperative task completion

- Self-advocacy and communication

- Empathy building

- Building competence to develop resilient confidence (not false confidence), increasing resilience, working toward stronger "grit"

The systems needed to provide appropriate guidance without being controlling (depending on where participants were on the RPG Ability Model), recognize when participants were struggling without being intrusive, and adapt to individual learning speeds and styles. This approach was based on one of the Therapeutic Recreation Ability Models.
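One way to combine "recognize struggle without being intrusive" with respect for a participant's place on an ability model has four parts:

- Watch a short window of recent attempts.
- Escalate hints only when failures dominate that window.
- Cap how directive the hints can get by stage.
- Fade support after successes.

Below is a minimal Python sketch under those assumptions. The stage names, window size, and thresholds are hypothetical placeholders, not the actual stages of the RPG Ability Model.

```python
from collections import deque

# Hypothetical stages (least to most independent) and the most directive
# hint level each allows. Placeholder labels, not the real model's stages.
STAGE_MAX_HINT = {"structured": 3, "guided": 2, "independent": 1}


class GuidanceTracker:
    """Escalates hints when recent attempts mostly fail, capped by stage."""

    def __init__(self, stage: str, window: int = 5, fail_ratio: float = 0.6):
        self.stage = stage
        self.recent = deque(maxlen=window)   # True = success, False = failure
        self.fail_ratio = fail_ratio
        self.hint_level = 0                  # 0 = no unprompted guidance

    def record_attempt(self, success: bool) -> int:
        """Record one attempt and return the hint level to use next."""
        self.recent.append(success)
        if success:
            self.hint_level = max(0, self.hint_level - 1)   # fade support on progress
        elif self._struggling():
            cap = STAGE_MAX_HINT[self.stage]
            self.hint_level = min(cap, self.hint_level + 1)
        return self.hint_level

    def _struggling(self) -> bool:
        # Only judge once the window is full, so a single slip isn't "struggling".
        if len(self.recent) < self.recent.maxlen:
            return False
        return self.recent.count(False) / len(self.recent) >= self.fail_ratio
```

A participant at the hypothetical "independent" stage never gets more than a light nudge, while one at the "structured" stage can receive progressively more explicit help.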

Dev2Dev Portal and Virtual Office & Workplace (VOW)

Starting in 2002, I developed Virtual Office & Workplace (VOW) products and services through Dev2Dev Portal LLC. This was a response to having had to build this kind of infrastructure and documentation at nearly every company I had worked for since 1995, and not finding other companies that could do it correctly. The goal was to provide humanized remote work infrastructure before "remote work" was commonplace.

This involved:

- Secure remote access to resources

- Real-time collaboration tools

- Document management and version control

- Communication systems (voice, video, text)

- Project management integration

- Taking into account critical human needs for individual expression and group "watercooler" chat

- Opportunities to bond and grow camaraderie (including proper gamification, not the buzzword bingo lip-service you usually see)

The technical challenges were around:

- Security: Remote access without compromising organizational systems

- Performance: Usable interfaces over varied network conditions

- Reliability: Systems that worked consistently for distributed teams

- Integration: Working with existing organizational infrastructure

- Psychology: Important considerations of individual and group psychology needs

- Neuroscience: Important considerations of the limitations of human cognitive functioning, and methods to optimize within them

Many of these challenges seem obvious now that remote work is common, but in 2002, solutions were far less mature and organizations were less receptive to remote work as a concept. Even in 2025, from my perspective of 30+ years of running remote work programs for companies large and small, around 80% of companies are "doing it wrong" with their remote worker programs. This is especially true of programs hurriedly implemented during the COVID panic. Many of those companies now use that experience as an excuse to claim that remote work doesn't work and are clawing workers back, when in reality they simply didn't implement their programs correctly (but that is a lengthy discussion for a separate series, another day).
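For the "usable interfaces over varied network conditions" challenge, a common technique is to pick a media quality tier from measured bandwidth. Hysteresis keeps a fluctuating connection from flapping between tiers. The tier names and bitrate thresholds below are hypothetical. This is a sketch of the general technique, not the VOW implementation.

```python
# Hypothetical media tiers: (name, minimum sustained kbit/s), lowest first.
TIERS = [("audio_only", 0), ("low_video", 300), ("sd_video", 800), ("hd_video", 2500)]


def choose_tier(current: str, measured_kbps: float, headroom: float = 1.25) -> str:
    """Pick a tier for the measured bandwidth, with hysteresis.

    Downgrade as soon as bandwidth falls below the current tier's minimum,
    but upgrade only when bandwidth exceeds the next tier's minimum by
    `headroom`, so a link hovering near a threshold doesn't flap.
    """
    names = [name for name, _ in TIERS]
    idx = names.index(current)
    # Step down while the current tier is no longer sustainable.
    while idx > 0 and measured_kbps < TIERS[idx][1]:
        idx -= 1
    # Step up only with a comfortable margin.
    while idx + 1 < len(TIERS) and measured_kbps >= TIERS[idx + 1][1] * headroom:
        idx += 1
    return TIERS[idx][0]
```

With these thresholds, a `low_video` session measuring 850 kbit/s stays put, because an upgrade needs 1,000. An `hd_video` session that drops to 500 kbit/s steps straight down to `low_video`.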

Learning Management System Enhancements (2010+)

Starting at Franklin Covey in the late 1990s and continuing through the 2010s and 2020s, I either built from scratch or greatly enhanced various Learning Management Systems (LMS), including adding interactive AI agents. A clear, consistent pattern emerged:

Problem: LMS platforms were effective for delivering content but weak at interactive support and personalized assistance.

Solution: Add AI agents that could:

- Answer common questions

- Guide users through processes

- Provide personalized suggestions based on user behavior

- Flag when users appeared stuck or frustrated

- Connect users with human support when appropriate

- Try approaches that resonate with each user (no one size fits all), ethically allowing customization and randomization for a better human-to-agent "connection"
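The "answer common questions" and "connect users with human support when appropriate" behaviors can share one decision: answer only when the best match is confident enough, otherwise hand off. Below is a minimal Python sketch. It assumes a plain question-to-answer dictionary and uses word-set (Jaccard) overlap as a deliberately simple stand-in for real NLP matching. The threshold value is an arbitrary assumption.

```python
import re
from typing import Dict, Optional, Set, Tuple


def _tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def answer_or_escalate(question: str, faq: Dict[str, str],
                       threshold: float = 0.5) -> Tuple[str, Optional[str]]:
    """Return ("answer", text) for a confident FAQ match, else ("human", None)."""
    q = _tokens(question)
    best_score, best_answer = 0.0, None
    for known_question, answer in faq.items():
        k = _tokens(known_question)
        union = q | k
        # Jaccard similarity: shared words / all distinct words.
        score = len(q & k) / len(union) if union else 0.0
        if score > best_score:
            best_score, best_answer = score, answer
    if best_score >= threshold:
        return ("answer", best_answer)
    return ("human", None)  # not confident: route to a person instead of guessing
```

Making the handoff the default for low-confidence matches keeps the agent from confidently misleading a learner.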

Technical approach:

- Monitor user interactions with the system

- Build behavioral profiles over time

- Use NLP for understanding questions and requests

- Provide context-appropriate responses

- Learn from successful and unsuccessful interactions

- Include cognitive neuropsychology principles

- Include a wide range of neuroscience principles
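The monitoring and behavioral-profile steps above, combined with the goal of flagging users who appear stuck, might look something like the following sketch. The event kinds, thresholds, and the `LearnerProfile` class are hypothetical, since a real LMS exposes its own event schema. The signals are prompts for a human or agent to check in, not diagnoses.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class LearnerProfile:
    """Running behavioral profile built from LMS interaction events.

    Event kinds ("view", "quiz_fail", "quiz_pass", "help_open") and all
    thresholds are hypothetical; a real LMS has its own event schema.
    """
    views: dict = field(default_factory=dict)   # page_id -> view count
    consecutive_quiz_fails: int = 0
    help_opens: int = 0
    last_progress_ts: Optional[float] = None    # set on first event, then on passes

    def update(self, kind: str, ts: float, page_id: str = "") -> None:
        if self.last_progress_ts is None:
            self.last_progress_ts = ts          # start the stall clock
        if kind == "view":
            self.views[page_id] = self.views.get(page_id, 0) + 1
        elif kind == "quiz_fail":
            self.consecutive_quiz_fails += 1
        elif kind == "quiz_pass":
            self.consecutive_quiz_fails = 0
            self.last_progress_ts = ts
        elif kind == "help_open":
            self.help_opens += 1

    def stuck_signals(self, now: float, stall_seconds: float = 1800) -> List[str]:
        """Return human-readable reasons this learner may be stuck (empty if none)."""
        signals = []
        looping = sorted(p for p, n in self.views.items() if n >= 4)
        if looping:
            signals.append(f"revisited {', '.join(looping)} 4+ times")
        if self.consecutive_quiz_fails >= 3:
            signals.append("3+ consecutive quiz failures")
        if self.help_opens >= 3:
            signals.append("opened help 3+ times")
        if self.last_progress_ts is not None and now - self.last_progress_ts > stall_seconds:
            signals.append(f"no progress in {stall_seconds / 60:.0f}+ minutes")
        return signals
```

This approach only reads events the LMS already produces. That fits the constraint that these additions enhance the platform rather than change it.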

A key area was making these additions without fundamentally changing the LMS platform. Organizations had invested significantly in these systems, and in training staff to use them. Solutions needed to enhance, not replace, existing functionality. In some instances, though, fully replacing a system with open-source solutions was a much better option than continuing to struggle to scale an imploding, pure-custom-code behemoth (see 90/10 Rule Applied: Building 3 years and $30M Worth of EdTech in just 3 Months for only $100K).

The LearningMate Breakthrough (2021-2022)

Around 2021-2022, I worked with LearningMate on a system that integrated multiple technologies to provide real-time, multilingual closed captions for K-12 classroom video calls, as an alternative to Zoom, Google, and similar services. This project combined many lessons from previous decades. It achieved results that exceeded expectations and blew away many commercial alternatives in performance, quality, security, scalability, and cost.

The Challenge

K-12 schools needed:

- Real-time closed captions for video calls (for hearing-impaired students and second-language learners)

- Multiple language support (14 languages at that time)

- Real-time profanity filtering (school-appropriate content)

- Speaker attribution (captions and transcripts had to clearly indicate who was speaking)

- Low latency (captions needed to stay synchronized with speech)

- High accuracy (errors in educational contexts can mislead students)

- Reliability (system needed to work consistently for daily classroom use)

- Extremely variable scaling, from as few as 200 concurrent users to at least 20,000 concurrent users during peak hours (I was able to easily scale it to 60,000+)

The Technical Solution

The system integrated:

- Vosk: Open-source speech recognition

- Jitsi: Open-source video conferencing

- ASR (Automatic Speech Recognition): Real-time voice-to-text

- NLP (Natural Language Processing): Understanding and filtering content

- SIP (Session Initiation Protocol): Managing communication sessions

Some of these challenges I blogged about along the way (in very rough form).[1][2][3][4][5][6]
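In a pipeline like this, the ASR engine emits unstable partial hypotheses while someone is still speaking, then a final result once an utterance ends. Showing partials keeps captions feeling live, and the finals replace them. The sketch below assumes a recognizer object with the same methods as Vosk's Python `KaldiRecognizer`: `AcceptWaveform`, `Result`, `PartialResult`, and `FinalResult`, the last three returning JSON strings. Because of that assumption, it can be exercised with a stand-in object. It is not the LearningMate code.

```python
import json
from typing import Iterable, Iterator, Tuple


def caption_events(recognizer, chunks: Iterable[bytes]) -> Iterator[Tuple[str, str]]:
    """Yield ("partial", text) and ("final", text) caption updates.

    `recognizer` is assumed to expose Vosk KaldiRecognizer-style methods:
    AcceptWaveform(bytes) -> bool, and Result() / PartialResult() /
    FinalResult() returning JSON strings.
    """
    last_partial = ""
    for chunk in chunks:
        if recognizer.AcceptWaveform(chunk):
            # An utterance ended: emit the stable transcript.
            text = json.loads(recognizer.Result()).get("text", "")
            last_partial = ""
            if text:
                yield ("final", text)
        else:
            # Still speaking: emit the evolving hypothesis only when it changes.
            partial = json.loads(recognizer.PartialResult()).get("partial", "")
            if partial and partial != last_partial:
                last_partial = partial
                yield ("partial", partial)
    # Flush audio still buffered when the stream ends.
    text = json.loads(recognizer.FinalResult()).get("text", "")
    if text:
        yield ("final", text)
```

With Vosk installed, the recognizer would typically be created as `KaldiRecognizer(Model(model_path), 16000)` and fed chunks of 16-bit mono PCM audio at that sample rate. Suppressing duplicate partials simply avoids re-sending identical caption updates to every client.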

The Results

After significant old-school "hacking" to optimize the code and the server VM pods, extensive R&D and production performance testing showed:

- 150% faster than Google's solution at the time

- 30% more accurate than Google's solution

- Support for multiple languages in real-time

- Effective profanity filtering without breaking conversation flow - and we gave this to the open source communities

- Reliable operation in daily classroom use

- Functional in both on-prem and hybrid multi-cloud environments, able to scale rapidly on demand with Kubernetes clusters

- Initially, each server component required 4 vCPUs and 8-16 GB of RAM, and each classroom needed a cluster of 3 servers! This was too expensive and not scalable, but we eventually whittled it down to just 1 vCPU and 512 MB-1 GB of RAM per server instance, while also improving performance and quality!
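The resource reduction is easier to appreciate as arithmetic. The sketch below takes the upper end of each range from the text: 4 vCPUs and 16 GB before, 1 vCPU and 1 GB after. It computes the per-instance reduction and how many instances fit on a worker node. The 16 vCPU / 64 GB node size is a hypothetical assumption. The text also doesn't say whether each classroom still needed three instances after optimization.

```python
# Per-instance footprints from the text, using the upper end of each range.
BEFORE = {"vcpu": 4, "ram_gb": 16}   # 4 vCPUs, 8-16 GB RAM
AFTER = {"vcpu": 1, "ram_gb": 1}     # 1 vCPU, 512 MB-1 GB RAM
NODE = {"vcpu": 16, "ram_gb": 64}    # hypothetical worker node size


def instances_per_node(footprint: dict, node: dict) -> int:
    """Instances that fit on one node, limited by the scarcest resource."""
    return min(node[r] // footprint[r] for r in footprint)


def reduction(before: dict, after: dict) -> dict:
    """How many times smaller each resource became."""
    return {r: before[r] / after[r] for r in before}


# The original design ran a 3-server cluster per classroom.
classroom_before = {r: 3 * BEFORE[r] for r in BEFORE}

print(reduction(BEFORE, AFTER))            # {'vcpu': 4.0, 'ram_gb': 16.0}
print(instances_per_node(BEFORE, NODE))    # 4
print(instances_per_node(AFTER, NODE))     # 16
print(classroom_before)                    # {'vcpu': 12, 'ram_gb': 48}
```

On that hypothetical node, the original footprint fits 4 instances, with CPU and RAM running out together. The optimized footprint fits 16, now CPU-bound, for a 4x density gain, while RAM per instance dropped 16x.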

Why It Worked

Several factors contributed to outperforming a commercial solution from a company with vastly more resources:

1. Domain-specific optimization: Although the system was initially intended specifically for K-12, as we kept iterating and optimizing, it turned out to be useful for our day-to-day development team meetings as well. I was Team Lead for more than 30 staff on that project, though most of them focused on the LMS components. I handled all of the video conferencing, ASR, NLP, AI, and related components myself (for a few months I also had a great neuroscience intern, Alex, whom I took under my wing to help with one of the R&D phases). Most of the team members were in various parts of Mumbai specifically, and India more broadly, and often struggled to understand each other's dialects and English accents. They found that the closed captions made it much easier to understand each other clearly, greatly reducing misunderstandings. We quickly dropped other products for our meetings and "ate our own dogfood," which only helped further improve the product. We gave source code and help back to the Jitsi, Prosody, Vosk, and other open-source communities, so that many of these improvements became part of the core products (making them easier for us to maintain and update, too). (See Rewriting EdTech's Rules.)

2. Open-source integration: By combining multiple open-source components rather than building everything from scratch or licensing commercial solutions, development could focus on integration and optimization rather than core functionality. 80-90+% of projects and companies don't need 100% custom-coding!

3. Practical constraints: K-12 requirements included profanity filtering and specific language support that general-purpose systems didn't prioritize. Building these in from the start rather than adding them later made them more effective.

4. Real-world testing and refinement: The system was developed in close coordination with daily classroom use by more than 20,000 people. Feedback came from real teachers and students, not theoretical use cases.

5. Focused scope: The system (at least the portions I worked on) didn't try to do everything - I focused on doing video conferencing in an educational setting, including closed captioning for educational video calls, exceptionally well. This wasn't hyperspecialization, though. A specialist wouldn't have been able to achieve this; it took a deep and broad generalist like me to solve these issues effectively. Prior to my successful engagement, every specialist they had thrown at the problem for several years had failed to deliver.

Technical Lessons

The LearningMate project reinforced several principles:

Appropriate technology matters more than advanced technology: Vosk wasn't the most sophisticated speech recognition system available, but it worked well for our use case, was reliable, and because it was open source, it could be optimized.

Integration skill is often more valuable than component selection: The components we used were all available to anyone. The value was in understanding how to take many seemingly disparate components, and having the vision and experience (and R&D budget) to find the best ways to integrate them effectively for specific goals.

Open source enables customization: We could modify components as needed, train models on relevant data, and optimize for specific hardware. Commercial solutions didn't offer anywhere near this flexibility.

Real-world testing identifies issues that theory doesn't: "Dogfooding" and effective user issue management systems helped surface problems that seemed manageable in development but became critical in actual classroom use. Conversely, some concerns that seemed important to product managers or technical staff turned out not to matter at all in practice (unnecessary features or rare edge cases).

Early AILCPH Development

Back around 2005-2020, and again during this period, I periodically picked up and set down work on what would become the AI Large Context Project Helper (AILCPH), which later evolved into SIIMPAF.

The initial goal was managing large technical projects with extensive documentation, dependencies, and complex requirements. Projects with hundreds or thousands of files, multiple interdependent components, and documentation that needed to stay synchronized with code.

Core capabilities needed:

- Ingesting and indexing large document sets

- Understanding relationships between documents

- Answering questions about project structure and dependencies

- Suggesting relevant documentation when code changes

- Tracking decisions and rationale over time

- Helping new contributors understand project context

- AI Retrieval-Augmented Generation (RAG)

- AI Model Context Protocol (MCP)

- Project-wide storage, retrieval, sourcing, searching, understanding, and trying to get to actual grokking (which AI LLMs DO NOT DO!)

This required:

- Document processing and chunking strategies

- Vector databases for semantic search

- Local AI models for understanding and generation

- Efficient indexing and retrieval

- Context management across long interactions

- Complex chaining

- Chain-failure fault-tolerance resilience

- Meaningful clarity in error messaging when "something goes wrong"

- Large context capacity, but more than just raw capacity, actually trying to get the tool to grok the project meaningfully
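The first few requirements above (chunking, semantic search, and efficient retrieval) form the retrieval half of RAG. Below is a minimal, dependency-free sketch: documents are split into overlapping word windows, and queries are ranked by cosine similarity. The bag-of-words vectors stand in for the learned embeddings a real vector database would store. The `TinyIndex` class, chunk size, and overlap are hypothetical, not AILCPH's actual design.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk_words(text: str, size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Stop once the remaining words are already covered by the previous window.
    return [" ".join(words[i:i + size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def _vector(text: str) -> Counter:
    """Bag-of-words term counts: a stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norms = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norms if norms else 0.0


class TinyIndex:
    """In-memory stand-in for a vector database (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self.entries: List[Tuple[str, str, Counter]] = []   # (source, chunk, vector)

    def add_document(self, source: str, text: str, size: int = 200, overlap: int = 50) -> None:
        for chunk in chunk_words(text, size, overlap):
            self.entries.append((source, chunk, _vector(chunk)))

    def search(self, query: str, k: int = 3) -> List[Tuple[str, str]]:
        """Return up to k (source, chunk) pairs ranked by cosine similarity."""
        q = _vector(query)
        scored = sorted(((_cosine(q, v), src, chunk) for src, chunk, v in self.entries),
                        key=lambda item: item[0], reverse=True)
        return [(src, chunk) for score, src, chunk in scored[:k] if score > 0]
```

The overlap keeps a passage that straddles a chunk boundary retrievable from at least one chunk. Keeping the source name with each chunk lets answers cite which project file they came from. Swapping `_vector` for a real embedding model is the usual next step.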

The patterns from decades of previous work all converged here:

- Early tabletop role-playing games exposing complex systems and coming up with working solutions

- Natural language processing from IRC bots and chatbots

- Distributed computing from Beowulf clusters and WISP infrastructure

- Real-time processing from LearningMate

- Reliability requirements from production systems

- Integration of multiple open-source components

This convergence of skills across seemingly unrelated domains - therapeutic gaming, network infrastructure, educational technology, AI development - created unique problem-solving capabilities (The Convergence Executive: Why Tomorrow's Leaders Need Impossibly Broad Expertise).

Patterns From 2005-2020

Several themes dominated this period:

Application Over Infrastructure

While infrastructure work continued, the focus shifted to applying technology to specific human needs. Technical excellence still mattered, but in service of measurable improvements in people's lives.

Open Source Integration

From LMS enhancements to LearningMate to AILCPH, the pattern was integrating existing open-source components rather than building everything from scratch. The value was in:

- Selecting appropriate components

- Integrating them effectively

- Optimizing for specific use cases

- Contributing improvements back to upstream projects

Domain Expertise Matters

Technical solutions improved significantly when informed by deep understanding of the domain - therapeutic gaming, K-12 education, project management. General-purpose solutions were rarely optimal for specific contexts.

Measurement and Evidence

Especially in therapeutic and educational contexts, demonstrating effectiveness required:

- Clear metrics for validity and reliability

- Controlled testing

- Comparison against alternatives

- Honest assessment of limitations

- Willingness to rapidly iterate and change approaches based on results

The LearningMate results (150% faster, 30% more accurate than Google) weren't marketing claims - they were measured results from practical testing in actual classrooms in 1:1 real-world comparisons.
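The text doesn't specify how accuracy was measured. Word error rate (WER) is the standard metric for this kind of ASR comparison: the word-level edit distance between a reference transcript and the system's output, divided by the number of reference words. Below is a minimal sketch of how a side-by-side test might score each system's captions against a human-made transcript. It illustrates the metric, not the project's actual test harness.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the previous ref prefix and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)
```

For example, scoring "open the books" against the reference "open your books now" gives one substitution and one deletion, so WER = 2/4 = 0.5. Lower is better.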

Looking Forward

By 2020, I had:

- Decades of experience with distributed computing and global enterprise network infrastructure across 6 continents and 150+ countries

- Extensive natural language processing and chatbot development

- Success integrating open-source components to solve specific problems

- Experience with real-time processing in production contexts

- Background in therapeutic and educational applications

- Beginning work on systems for managing large-scale technical projects

The next phase would confront a new challenge - the AI boom of the early 2020s created unprecedented demand for GPU resources, leading to scarcity and pricing that threatened to centralize AI development in the hands of a few large organizations.

This challenge would lead directly to DGPUNET - applying lessons from Beowulf clusters in the 1990s to modern GPU computing, creating a distributed alternative to centralized cloud computing for AI development.

That's where Part 4 picks up: the GPU shortage, the anti-competitive practices of AWS and other cloud providers, and building DGPUNET. DGPUNET is a response to the increasing regression back to the bad old days of 1970s mainframes, and to the threat of centralized control over all data, networking, computing, and AI infrastructure.

Next in Series: Part 4: The GPU Challenge and DGPUNET (2020-2025) - GPU scarcity, privacy and centralization concerns, and building a distributed alternative

Previous in Series:

- Part 1: Early Foundations (1977-1990) - RPGs, first programs, pattern matching, and establishing core principles

- Part 2: Building Infrastructure (1990-2005) - IRC bots, NLP, Beowulf clusters, distributed computing, and production systems

About This Series: This is Part 3 of a 5-part series documenting the technical evolution from early hobby programming to DGPUNET (Distributed GPU Network) and SIIMPAF (Synthetic Intelligence Interactive Matrix Personal Adaptive Familiar). The series focuses on practical problems and solutions, avoiding marketing language in favor of technical accuracy and honest assessment.

About the Author: William Hawkes-Robinson has been developing software since 1979, with focus areas including distributed computing, natural language processing, educational technology, and therapeutic applications of gaming. He is the founder of RPG Research and Dev2Dev Portal LLC, and is known internationally as "The Grandfather of Therapeutic Gaming" for his long-running work applying role-playing games to therapeutic and educational contexts.

Contact: hawkenterprising@gmail.com

Website: https://www.hawkerobinson.com

Tech Blog: https://techtalkhawke.com

RPG Research: https://RpgResearch.com

RPG Therapeutics LLC: https://rpg.llc

Dev2Dev Portal: https://dev2dev.net

Version: 2025.10.17-1245